Six months ago, I wrote about feeling paralyzed by AI. My co-founder was rebuilding our company with agents while I watched, unsure how to contribute. The fog lifted faster than I expected. But I didn't anticipate what came next.

Nowadays, I check my agents before my email.

That's not a metaphor. Every morning, I open three agentic systems that I built myself before I open Gmail. Each functions like a specialized team: status updates, task recommendations, deliverable reviews, working sessions on whatever needs attention. The interactions feel more like managing direct reports than using software.

This isn't a flex about being technical. Six months ago, I couldn't have built any of this. The point is: I'm a business leader who now operates this way. And if I can get here, so can you.

My Three AI Teams

Team 1: Marketing Strategy & Ops Agent

Here's a confession: my co-founder and I have been pretty awful at marketing for ten years. It's not our core strength. We're consultants who know how to deliver, but getting the word out? We've tried and failed more times than I can count.

So I built an agent to make us better marketers.

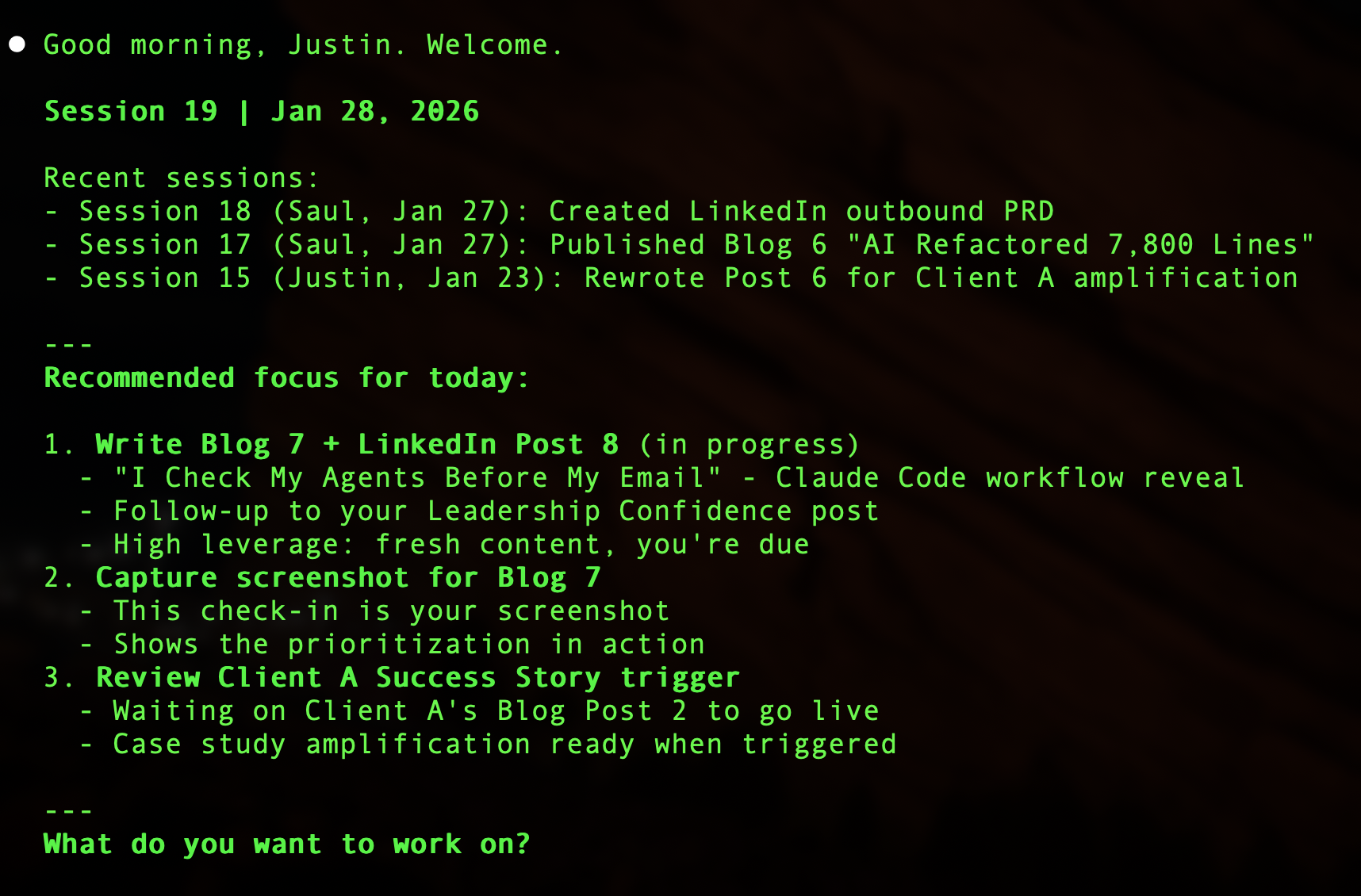

It knows our GTM plan, our content calendar, our target audience, and our voice. When I start a session, it pulls the latest from our shared repo, shows me what's been done since my last session (my co-founder works with it too), and tells me exactly what to focus my limited time on.

This morning's check-in:

The prioritization alone is worth everything. I have maybe 2-4 hours a week for marketing. The agent makes sure those hours go to the highest-leverage activities, not whatever feels urgent.
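To make the idea concrete, here is a minimal sketch of that kind of prioritization, written in Python. It is not how our agent actually works; the `Task` fields, the leverage scores, and the `plan_week` helper are all hypothetical, and the scoring is a simple greedy rule (most leverage per hour first) under a fixed hour budget.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours: float     # estimated effort; assumed to be greater than zero
    leverage: float  # subjective impact score, higher is better (hypothetical scale)


def plan_week(tasks, budget_hours):
    """Greedy pick: take tasks in order of leverage per hour while they still fit."""
    ranked = sorted(tasks, key=lambda t: t.leverage / t.hours, reverse=True)
    plan, remaining = [], budget_hours
    for task in ranked:
        if task.hours <= remaining:
            plan.append(task)
            remaining -= task.hours
    return plan


# Illustrative tasks only; the names and numbers are made up.
tasks = [
    Task("Draft blog post", hours=2.0, leverage=8.0),
    Task("Redesign logo", hours=3.0, leverage=3.0),
    Task("Reply to podcast invite", hours=0.5, leverage=4.0),
    Task("Tweak website footer", hours=1.0, leverage=1.0),
]

plan = plan_week(tasks, budget_hours=3.0)
for task in plan:
    print(f"{task.name} ({task.hours}h)")
```

With a three-hour budget, the sketch picks the podcast reply and the blog post and skips the logo and footer work. The hard part is not the arithmetic but agreeing on what "leverage" means, which is exactly what the GTM plan gives the agent.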

But it does more than prioritize. When I'm drafting content, like this post, it runs critiques through different lenses. It pushes back when my ideas conflict with our stated strategy. It remembers decisions we made three weeks ago that I've already forgotten.

We're not suddenly marketing geniuses. But we're consistently executing a coherent plan for the first time in a decade.

Team 2: Consulting Project Agent

Our consulting project agent helps manage client engagements. It tracks deliverables, maintains interview notes, synthesizes findings, and drafts outputs. Think of it as a project manager who also happens to be a junior consultant.

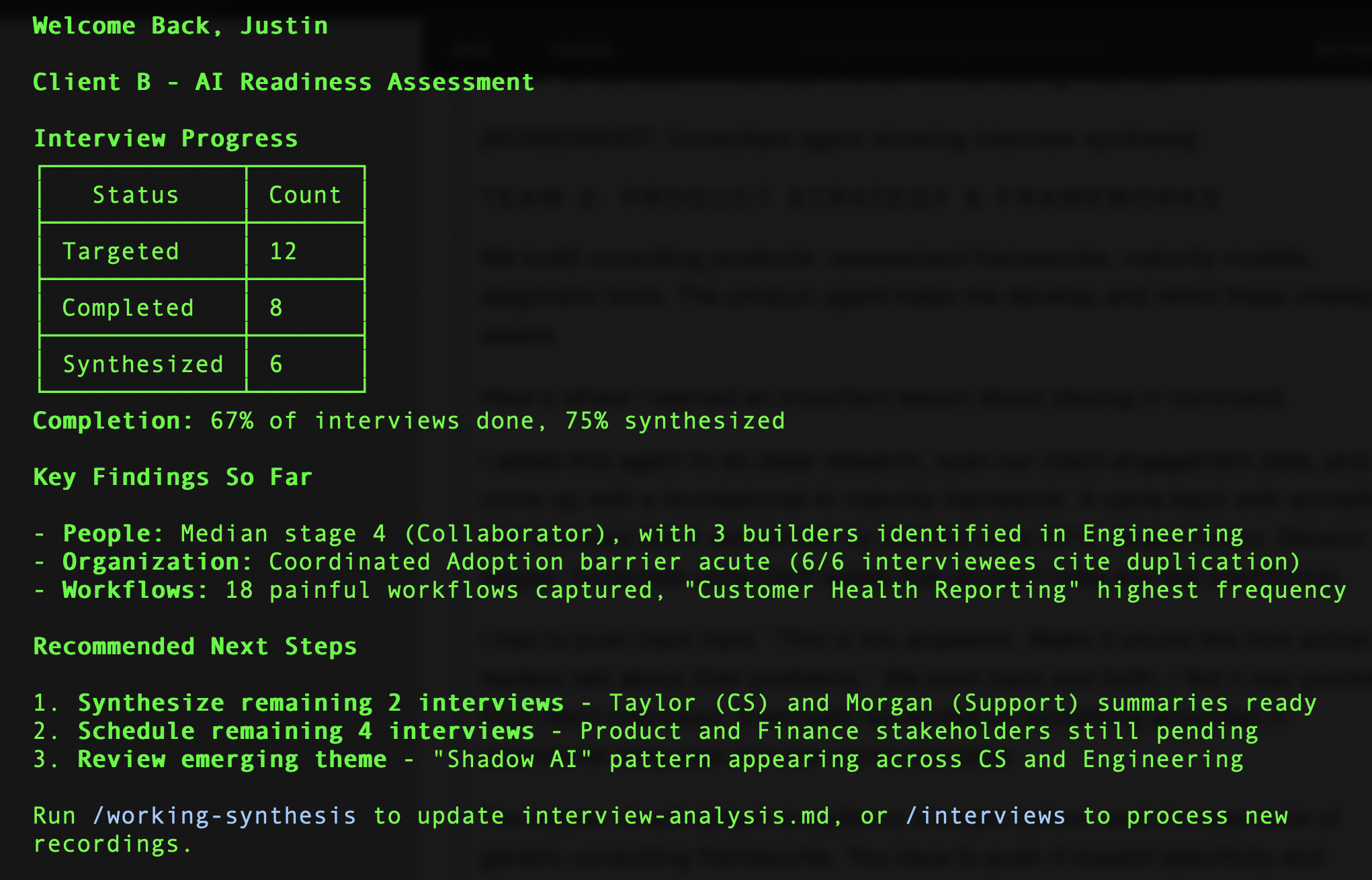

On a recent client assessment:

- Conducted 18 stakeholder interviews (I ran them, the agent synthesized)

- Generated a 40-page findings deck from interview transcripts

- Flagged contradictions between what executives said and what practitioners reported

- Drafted recommendations that built on patterns from our previous engagements

The synthesis that used to take me 4-6 hours now takes 15 minutes of review and refinement. More importantly, the agent catches things I miss. It remembers what the VP of CS said in interview 3 when I'm reviewing interview 11.

Team 3: Strategy & Framework Agent

As a custom AI design and build shop, our "offerings" run on robust maturity models, frameworks and diagnostic tools. The strategy & framework agent helps me develop and refine these intellectual assets.

Working with this agent in particular has taught me an important lesson about staying in command.

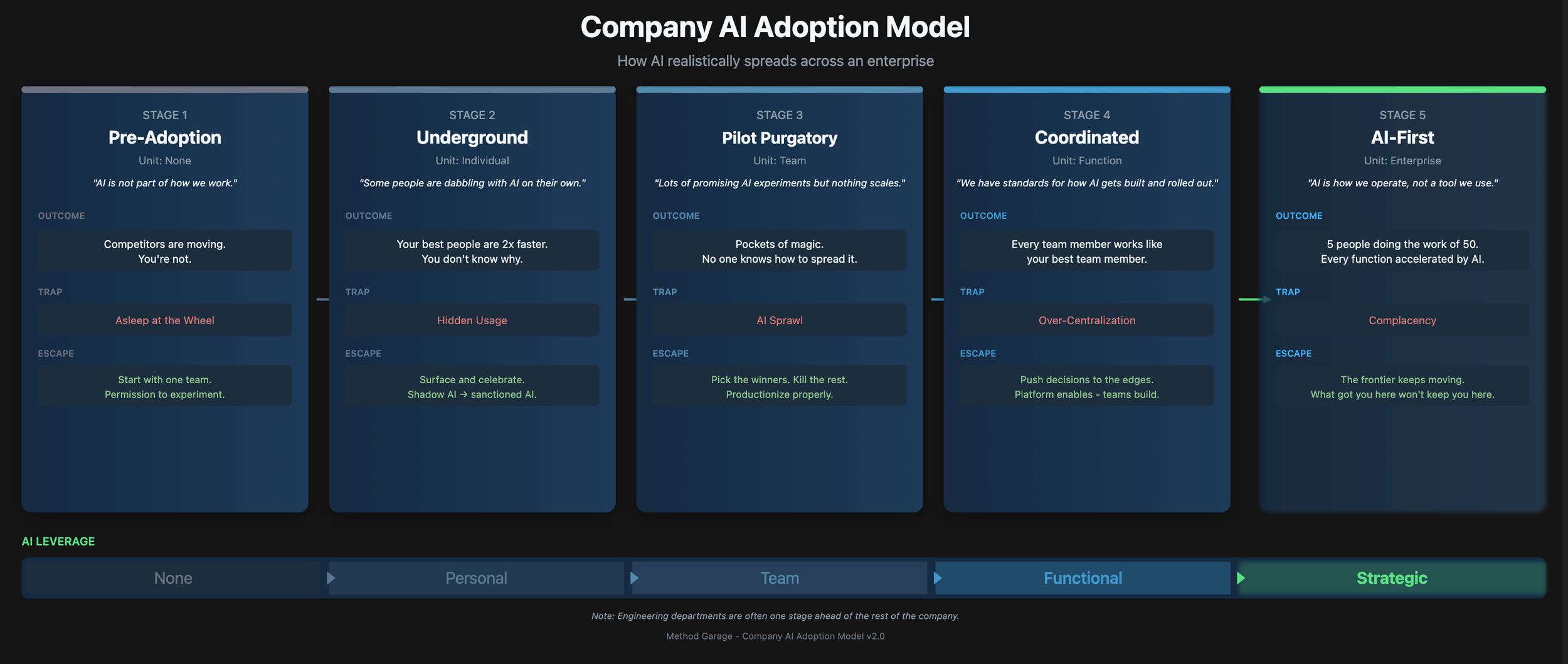

For example, I asked this agent to do deep research, scan our client engagement data, and come up with a foundational AI maturity framework. It came back with something that sounded like it was written by Deloitte circa 2015. Jargon-heavy. Generic stages. Corporate consultant-speak that wouldn't help any real practitioner.

I had to push back hard. "This is way too academic. Make it more relevant to how actual CS leaders talk about their problems." We went back and forth for hours. I fed it real quotes from client interviews and podcast episodes with real business operators sharing their AI maturity journeys. Eventually, we landed on something grounded in practitioner language and real-world examples.

The lesson: AI will default to patterns it's seen before - and it's seen a lot of generic business frameworks. You have to push it toward specificity and authenticity. You have to stay in command.

Here's a high-level view of one of our framework iterations.

What This Actually Feels Like

The closest analogy: managing direct reports who have perfect memory.

Last Tuesday, I was heading into a client presentation convinced I knew what to recommend. My consulting project agent had synthesized 18 interviews and quietly surfaced a pattern I'd missed: the executives and practitioners were telling completely different stories about the same process. Without it, I would have walked in with a recommendation that solved the wrong problem.

That's the real value. Not just speed - though yes, it's faster. The agent saved me from looking, or at least feeling, incompetent.

But they're not autonomous. I'm still making the decisions. They're extending my capacity to gather information, synthesize complexity, and execute with consistency. The judgment is mine. The leverage is theirs.

What I'm Not Showing You

These three are my daily drivers. But they're not our only agentic systems.

We also have agents for finance and accounting, development and engineering, security and privacy review, and sales research and outreach. What's more, my co-founder has built an entire reference architecture for how we create, maintain and coordinate these systems.

I focus on these three because they represent my core responsibilities: marketing our firm, delivering client work, and building our products. They're where I spend my cognitive cycles. And they're where AI assistance has most transformed how I operate.

For the Skeptical Leader

If you're reading this thinking "that sounds great but I could never build that," I want to push back.

Six months ago, I couldn't either. What changed wasn't just a technical skill - it was a shift in how I thought about the problem.

I stopped thinking "I need to learn to code" and started thinking "I need to clearly articulate how I work." Once I could explain my workflows, my decision criteria, my context - the AI could actually help. The main barrier wasn't technical. It was clarity.

You don't need to build three teams at once. Start with one area where you (or your team) spend significant time on synthesis, coordination, or repeated decisions. Write down how you'd brief a smart new hire on that area. That document is 80% of what you need.
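If it helps to see the shape of such a brief, here is one possible sketch in Python. The `Brief` class, its field names, and the sample entries are illustrative assumptions, not our actual setup; the only point is that workflows, decision criteria, and context can be written down as plain text and handed to an agent as its starting instructions.

```python
from dataclasses import dataclass, field


@dataclass
class Brief:
    """A hypothetical 'new hire' brief for one area of work."""
    area: str
    workflows: list[str] = field(default_factory=list)
    decision_criteria: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Turn the brief into plain text an agent could be given up front."""
        sections = [
            ("How the work gets done", self.workflows),
            ("How decisions get made", self.decision_criteria),
            ("What you need to know", self.context),
        ]
        lines = [f"Brief: {self.area}"]
        for title, items in sections:
            if not items:
                continue  # skip sections that have not been written yet
            lines.append("")
            lines.append(title)
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Sample entries are placeholders to show the format.
brief = Brief(
    area="Marketing",
    workflows=["Start each session by reviewing what changed since the last one"],
    decision_criteria=["Prefer the highest-leverage activity over whatever feels urgent"],
    context=["Marketing time is limited to a few hours a week"],
)
print(brief.render())
```

Nothing here requires code, of course. A well-organized document with the same three sections does the same job.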

This is what AI-first leadership looks like. Not "I use AI tools." Rather: "AI has changed the structure of my thinking and my work."

The clarity comes faster than you think.

If you're a leader wondering how to actually work this way - not just read about it - shoot me a note. Happy to share more about how we set this up.